Particle Swarm Optimization on a GPU

Train a Robust Classifier using a GPU

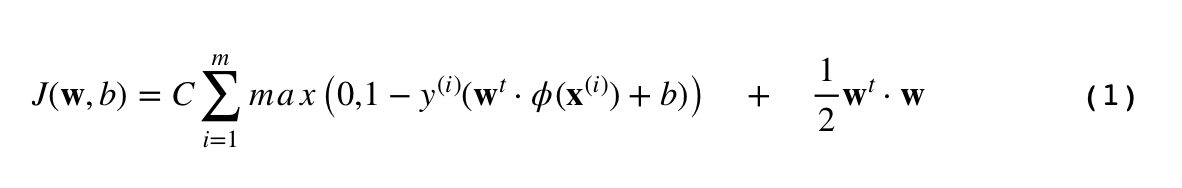

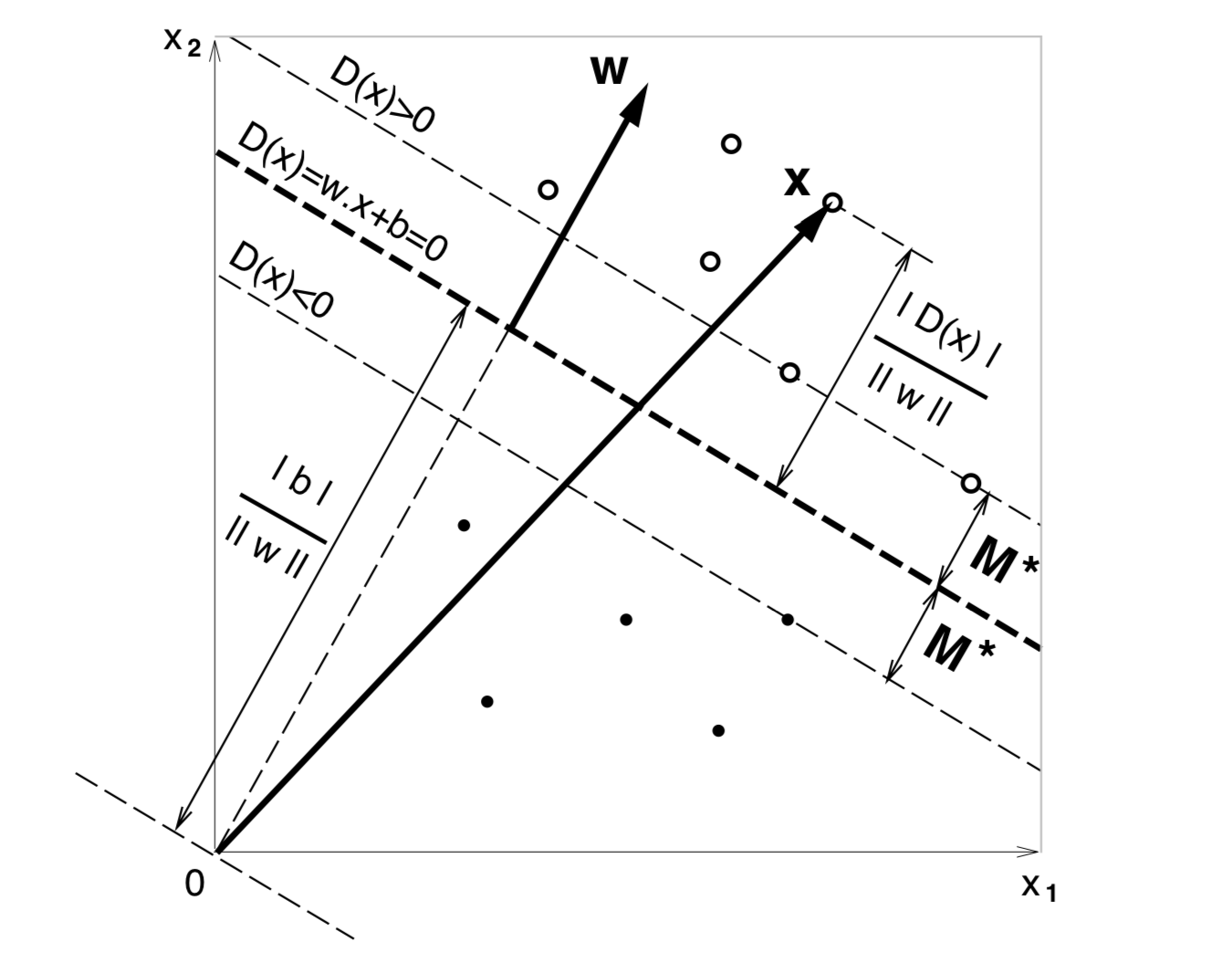

This notebook shows how to optimize a multi-class, linear support vector machine (SVM) using a simulation-based optimizer. Any simulation-based optimizer could be used with the CUDA kernel in this notebook; in this example I used KernelML, my custom optimizer. The notebook runtime should be set to use a GPU: Runtime->Change runtime type.
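The notebook's exact loss function isn't reproduced here. As a minimal sketch, assuming the Crammer and Singer multi-class hinge loss (one common primal form for a multi-class linear SVM), the objective a simulation-based optimizer would minimize is 0.5·‖W‖² + C·Σᵢ max(0, 1 + max_{j≠yᵢ} sᵢⱼ − sᵢ,yᵢ), where sᵢⱼ = xᵢ·wⱼ + bⱼ. The function name `multiclass_hinge_primal` and the parameter `C` are illustrative, not taken from the notebook or from KernelML.

```python
import numpy as np

def multiclass_hinge_primal(W, b, X, y, C=1.0):
    """Crammer-Singer style primal objective for a linear multi-class SVM.

    W: (n_features, n_classes) weights, b: (n_classes,) biases,
    X: (n_samples, n_features) inputs, y: (n_samples,) integer labels.
    """
    n = X.shape[0]
    rows = np.arange(n)
    scores = X @ W + b                  # (n_samples, n_classes)
    true_scores = scores[rows, y]       # score of each sample's correct class
    # Mask the correct class so the max runs over the wrong classes only.
    wrong = scores.copy()
    wrong[rows, y] = -np.inf
    margins = np.maximum(0.0, 1.0 + wrong.max(axis=1) - true_scores)
    # L2 regularizer on the weights plus the C-weighted sum of hinge terms.
    return 0.5 * np.sum(W ** 2) + C * margins.sum()

# Tiny usage example: 3 samples, 2 features, 3 classes.
X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
y = np.array([0, 1, 2])
W = np.zeros((2, 3))
b = np.zeros(3)
# All scores are 0, so every margin term is 1 and the loss is 3.0.
print(multiclass_hinge_primal(W, b, X, y))
```

Because the objective is just a function of (W, b), any optimizer that can propose candidate parameters and compare their losses can minimize it; no gradients or dual variables are required.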

The original SVM formulation can be found in this paper: Vapnik 1992. The robustness of the algorithm has been improved since then; see Robust Classifier 2019, section 6.1. The robust version looks tedious to implement. If you are interested in implementing it, please consider emailing me and looking at KernelML's documentation. Email: rohankotwani@gmail.com.

SVMs are typically optimized using Lagrange multipliers and quadratic programming. However, this process might not be fast enough, and we want to take advantage of GPUs. :) Instead, we will optimize the SVM primal form with brute-force, simulation-based methods. This is not as bad an idea as it sounds: training an SVM with a standard quadratic programming solver can scale as O(N³), where N is the number of data points, while scoring many candidate solutions of the primal problem is independent work that parallelizes well on a GPU.
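Brute force is only practical if many candidate solutions can be scored at once. A minimal sketch, assuming the same Crammer and Singer hinge loss as the primal objective: the function below (a hypothetical name, not part of KernelML or the notebook) scores K candidate weight matrices against the data in one vectorized call. It runs with NumPy on the CPU; because each candidate is independent, this is the kind of work a CUDA kernel, or a NumPy-compatible GPU library such as CuPy, could parallelize.

```python
import numpy as np

def batched_primal_loss(Ws, bs, X, y, C=1.0):
    """Evaluate the multi-class hinge primal for K candidate models at once.

    Ws: (K, n_features, n_classes), bs: (K, n_classes),
    X: (n_samples, n_features), y: (n_samples,) integer labels.
    Returns a (K,) array with one objective value per candidate.
    """
    n = X.shape[0]
    rows = np.arange(n)
    # Scores for every candidate, sample and class: shape (K, n, n_classes).
    scores = np.einsum("nf,kfc->knc", X, Ws) + bs[:, None, :]
    true_scores = scores[:, rows, y]             # (K, n)
    wrong = scores.copy()
    wrong[:, rows, y] = -np.inf                  # exclude the correct class
    margins = np.maximum(0.0, 1.0 + wrong.max(axis=2) - true_scores)
    reg = 0.5 * np.sum(Ws ** 2, axis=(1, 2))     # per-candidate L2 penalty
    return reg + C * margins.sum(axis=1)

# Usage: score two candidates on a 3-sample toy problem.
X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
y = np.array([0, 1, 2])
Ws = np.stack([
    np.zeros((2, 3)),                                          # all margins violated
    10.0 * np.array([[1.0, 0.0, -1.0], [0.0, 1.0, -1.0]]),     # separates all samples
])
bs = np.zeros((2, 3))
losses = batched_primal_loss(Ws, bs, X, y)
# Expected values: 3.0 (hinge terms only) and 200.0 (regularizer only).
print(losses)
```

The memory cost grows as K × N × n_classes, so on a GPU the batch size K would typically be chosen to fit device memory.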

The Iris dataset was used to test whether simulation-based optimization is feasible on a GPU. The dataset has 150 rows and 4 feature columns. It is a small dataset, but the optimization procedure appears to work. The optimizer's documentation can be found here, and the CPU-parallel implementation of this algorithm can be found here.
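KernelML's API isn't reproduced here, so the sketch below swaps in a plain random-search hill climber to show the overall loop: perturb the current best parameters, score every perturbation in one batch, keep the best, and shrink the step size. `random_search_svm` and all of its parameters are hypothetical, and the data is a synthetic stand-in with the same shape as Iris (150 rows, 4 features, 3 classes), not the real dataset.

```python
import numpy as np

def batched_primal_loss(Ws, bs, X, y, C=1.0):
    """Multi-class hinge primal for K candidates (Ws: (K, f, c), bs: (K, c))."""
    rows = np.arange(X.shape[0])
    scores = np.einsum("nf,kfc->knc", X, Ws) + bs[:, None, :]
    true_scores = scores[:, rows, y]
    wrong = scores.copy()
    wrong[:, rows, y] = -np.inf
    margins = np.maximum(0.0, 1.0 + wrong.max(axis=2) - true_scores)
    return 0.5 * np.sum(Ws ** 2, axis=(1, 2)) + C * margins.sum(axis=1)

def random_search_svm(X, y, n_classes, n_candidates=256, n_iters=200,
                      step=0.5, decay=0.98, C=1.0, seed=0):
    """Hypothetical sampling-based optimizer (NOT KernelML's algorithm)."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    best_W = np.zeros((n_features, n_classes))
    best_b = np.zeros(n_classes)
    best_loss = batched_primal_loss(best_W[None], best_b[None], X, y, C)[0]
    history = [best_loss]
    for _ in range(n_iters):
        # Sample Gaussian perturbations around the current best solution.
        Ws = best_W + step * rng.standard_normal((n_candidates, n_features, n_classes))
        bs = best_b + step * rng.standard_normal((n_candidates, n_classes))
        losses = batched_primal_loss(Ws, bs, X, y, C)
        k = np.argmin(losses)
        if losses[k] < best_loss:          # only accept improvements
            best_W, best_b, best_loss = Ws[k], bs[k], losses[k]
        history.append(best_loss)
        step *= decay                      # shift from exploration to refinement
    return best_W, best_b, history

# Synthetic, Iris-shaped data: three Gaussian clusters of 50 points in 4-D.
rng = np.random.default_rng(1)
centers = np.array([[0, 0, 0, 0], [3, 3, 0, 0], [0, 3, 3, 3]], dtype=float)
y = np.repeat(np.arange(3), 50)
X = centers[y] + 0.5 * rng.standard_normal((150, 4))

W, b, history = random_search_svm(X, y, n_classes=3)
accuracy = np.mean(np.argmax(X @ W + b, axis=1) == y)
print(f"final loss {history[-1]:.2f}, training accuracy {accuracy:.2f}")
```

To run this on the real data, the synthetic `X` and `y` could be replaced by the arrays returned from scikit-learn's `load_iris(return_X_y=True)`. The shrinking step size is one simple schedule; KernelML's own sampling and update rules may differ.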